Challenges of Machine Learning

Introduction:

Machine learning is a powerful tool for extracting insights from data, but it also comes with a number of challenges. In general, the problems fall into two main categories: bad algorithms and bad data. Let’s look at these challenges in depth, starting with data-related issues.

Insufficient Quantity of Training Data

Imagine you are teaching a child to identify an apple. You point to an apple and say “apple,” and after a few repetitions the child learns to recognize apples of different colors and shapes. Machine learning is not that simple. Many algorithms need large amounts of data to work well: even simple tasks may require thousands of examples, while complex tasks such as image or speech recognition may require millions.

The need for large datasets arises from the fact that machine learning algorithms learn patterns and relationships from data. In natural language processing or image classification, for instance, the algorithms must be exposed to a wide variety of examples to generalize well. Without sufficient data, the model may fail to capture the underlying patterns, leading to poor performance.

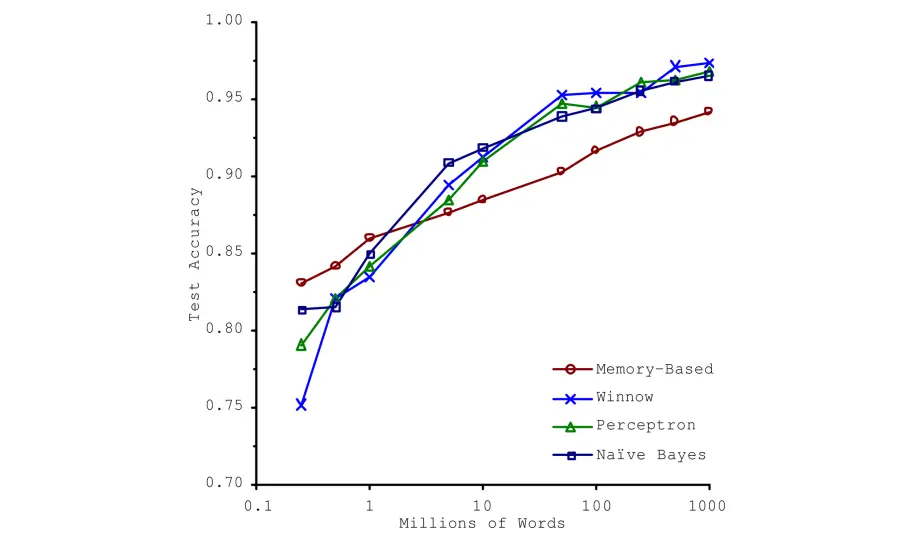

The Unreasonable Effectiveness of Data

A landmark paper by Microsoft researchers Michele Banko and Eric Brill in 2001 demonstrated that with enough data, even relatively simple machine learning algorithms could perform nearly as well as more complex ones on tasks like natural language disambiguation. The findings suggested that spending time and resources on collecting a large dataset might be more beneficial than investing in complex algorithm development.

Peter Norvig and his colleagues popularized this idea further in 2009, arguing that data often matters more than sophisticated algorithms. This idea, known as “the unreasonable effectiveness of data,” emphasizes the critical role of data quantity and quality in the success of machine learning models. However, large datasets are not always easy or cheap to obtain, so the importance of good algorithms should not be dismissed.

Nonrepresentative Training Data

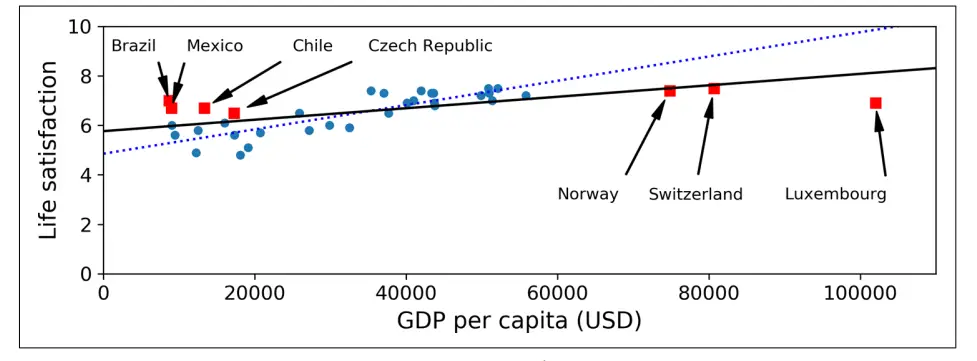

To build a model that generalizes well, the training data must be representative of the cases the model will encounter in real-world use. For instance, if you train a model to predict life satisfaction from economic indicators and your dataset only includes rich countries, the model may perform poorly when applied to poorer countries.

A nonrepresentative training set can lead to inaccurate predictions, because the model may learn patterns that are specific to the training data but do not hold more broadly. For example, if the dataset used to train a health-diagnosis model lacks samples from certain demographic groups, the model may be less effective for those groups.
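
One cheap way to spot the problem described above is to check how much of the data the model will face in production falls outside the range covered by the training set. The sketch below does this for a single numeric feature. The GDP-per-capita values are made-up illustrative numbers, and `out_of_range_fraction` is a hypothetical helper, not a library function.

```python
# Toy GDP-per-capita values (made-up numbers, for illustration only).
train_gdp = [38_000, 41_500, 45_200, 50_900, 55_800, 60_200]  # rich countries only
production_gdp = [1_200, 4_800, 9_700, 43_000, 52_000]  # includes poorer countries


def out_of_range_fraction(train_values, new_values):
    """Return the share of new values lying outside the training range."""
    low, high = min(train_values), max(train_values)
    outside = [v for v in new_values if v < low or v > high]
    return len(outside) / len(new_values)


frac = out_of_range_fraction(train_gdp, production_gdp)
print(f"{frac:.0%} of production samples fall outside the training range")
```

A range check only catches the crudest mismatches: a group can sit inside the training range and still be badly underrepresented.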

Examples of Sampling Bias

Sampling bias occurs when the way data is collected causes certain groups or characteristics to be underrepresented. A famous example is the Literary Digest poll for the 1936 US presidential election. Despite a very large number of responses, the poll predicted that Landon would defeat Roosevelt; instead, Roosevelt won in a landslide. The Digest drew its mailing list from sources such as telephone directories and magazine subscriber lists, which favored wealthier individuals, who were more likely to vote for Landon.
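
The key lesson of the Literary Digest poll is that a larger sample does not fix a biased sampling frame. The toy simulation below makes that concrete. The electorate and its proportions are invented for illustration and are not the 1936 figures: 30% of voters are “wealthy” and favor candidate A 60% of the time, while the rest favor A 30% of the time.

```python
import random

# Toy electorate (invented proportions, not historical data):
# each voter is an (is_wealthy, votes_for_a) pair.
population = (
    [(True, True)] * 18_000 + [(True, False)] * 12_000      # wealthy: 60% favor A
    + [(False, True)] * 21_000 + [(False, False)] * 49_000  # others: 30% favor A
)


def share_for_a(voters):
    """Fraction of the given voters who support candidate A."""
    return sum(votes_a for _, votes_a in voters) / len(voters)


true_share = share_for_a(population)

# Biased frame: only wealthy voters are reachable (think subscriber lists).
# Even polling *everyone* in this frame gives the wrong answer.
biased_frame = [voter for voter in population if voter[0]]
biased_estimate = share_for_a(biased_frame)

# A much smaller simple random sample drawn from the whole population.
rng = random.Random(42)
random_estimate = share_for_a(rng.sample(population, 1_000))

print(f"true share for A:          {true_share:.3f}")
print(f"entire biased frame (30k): {biased_estimate:.3f}")
print(f"random sample (1k):        {random_estimate:.3f}")
```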

Another example arises when building a music recommendation system. If you collect training data by searching for funk music on a platform like YouTube, the results may be biased toward popular artists and may not represent the full spectrum of funk music. This type of bias in the training data can produce a model that does not capture the diversity of styles.

Poor-Quality Data

Data quality is critical to the performance of machine learning models. If the data is full of errors, outliers, or noise, the model will struggle to detect meaningful patterns. For instance, if you are training a model to predict customer churn and your data contains many incorrect entries or irrelevant features, the model’s predictions will be less reliable.

Data cleaning is an important part of a data scientist’s job. This process includes identifying and addressing outliers, filling in missing values, and correcting errors. For example, if 5% of your customer data is missing age information, you need to decide whether to ignore this attribute, skip these instances, or fill in the missing values using statistical methods.
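
The three options for the missing ages can be sketched in plain Python as follows. The customer records are hypothetical toy data, and the median is used as the fill value because it is less sensitive to outliers than the mean. In real projects, scikit-learn’s `SimpleImputer` (for example with `strategy="median"`) provides the same idea for tabular data.

```python
import statistics

# Toy customer records (hypothetical); None marks a missing age.
customers = [
    {"id": 1, "age": 34, "churned": False},
    {"id": 2, "age": None, "churned": True},
    {"id": 3, "age": 51, "churned": False},
    {"id": 4, "age": 27, "churned": True},
    {"id": 5, "age": None, "churned": False},
]

# Option 1: ignore the attribute entirely.
without_age = [{k: v for k, v in c.items() if k != "age"} for c in customers]

# Option 2: skip the instances that are missing the value.
complete_rows = [c for c in customers if c["age"] is not None]

# Option 3: fill in missing values with a statistic (here, the median
# of the known ages), building new records so the originals stay intact.
median_age = statistics.median(c["age"] for c in complete_rows)
imputed = [
    {**c, "age": median_age if c["age"] is None else c["age"]} for c in customers
]
```

Whichever option you pick, compute the fill value on the training data only and reuse it for new data, so the model sees consistent inputs.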

Irrelevant Features

The quality of a machine learning model also depends on the features used for training. Irrelevant or unnecessary features can reduce the performance of the model and complicate the learning process. Feature engineering is an important step in machine learning that involves selecting, extracting, and building features to improve the model’s effectiveness.

Feature selection involves choosing the most relevant features from the existing dataset. Feature extraction combines existing features to create new ones that might be more informative. For instance, in a model predicting customer satisfaction, combining individual features like purchase frequency and average transaction value might provide more insight than using each feature separately.
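
Both ideas can be sketched on a tiny example. The data below is hypothetical and was made up so that satisfaction roughly tracks total monthly spend. The new feature is extracted by multiplying purchase frequency by average transaction value, and features are then ranked by the absolute value of their Pearson correlation with the target. The 0.5 cutoff is an arbitrary choice for illustration.

```python
# Toy customer data (hypothetical values). The target was made up so that
# satisfaction roughly tracks total monthly spend.
features = {
    "purchase_frequency": [2, 5, 1, 8, 3],  # purchases per month
    "avg_transaction_value": [40.0, 25.0, 90.0, 30.0, 60.0],
}
satisfaction = [2.7, 3.4, 2.8, 5.9, 4.5]  # e.g. a survey score


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Root of the summed squared deviations; the n terms cancel in the ratio.
    spread_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return cov / (spread_x * spread_y)


# Feature extraction: combine two existing features into a new one.
features["monthly_spend"] = [
    f * v
    for f, v in zip(features["purchase_frequency"], features["avg_transaction_value"])
]

# Feature selection: rank by |correlation| with the target, keep the strong ones.
ranking = sorted(
    ((name, pearson(values, satisfaction)) for name, values in features.items()),
    key=lambda item: abs(item[1]),
    reverse=True,
)
selected = [name for name, r in ranking if abs(r) >= 0.5]

for name, r in ranking:
    print(f"{name:>22}: r = {r:+.2f}")
print("selected:", selected)
```

Correlation only measures a linear relationship between one feature and the target, so it is a crude filter; it is used here because it is easy to follow by hand.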

Overfitting the Training Data

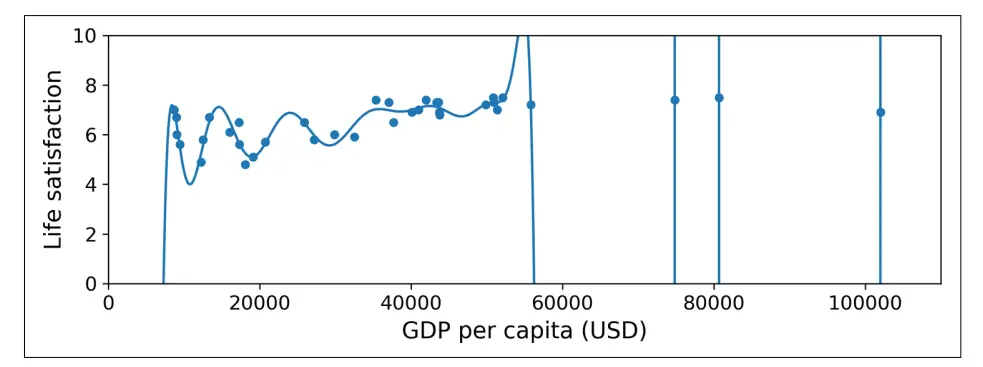

Overfitting occurs when a model performs exceptionally well on the training data but fails to generalize to new, unseen data. This problem arises when the model learns not only the underlying patterns but also the noise in the training data. Overfitting often happens with complex models that have too many parameters relative to the amount of training data.

For example, if you fit a high-degree polynomial to a small dataset, it may fit the training data perfectly but perform poorly on new data. Regularization mitigates overfitting by constraining the model, for example by limiting the size of the coefficients in linear regression.
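
The polynomial case can be reproduced with the standard library alone. The sketch below, on toy data drawn from the trend y = 2x + 1 plus noise, compares an ordinary least-squares line with a degree-9 polynomial that passes through all 10 training points (written in Lagrange form). The polynomial’s training error is zero by construction; its error on the unseen in-between points is usually far larger than the line’s, because high-degree polynomials oscillate wildly between noisy points, especially near the ends of the range.

```python
import random

# Noisy samples from a simple underlying trend y = 2x + 1 (toy data).
rng = random.Random(0)
train_x = [float(x) for x in range(10)]
train_y = [2 * x + 1 + rng.gauss(0, 1) for x in train_x]
# Unseen points between the training inputs, with noise-free targets.
test_x = [x + 0.5 for x in train_x[:-1]]
test_y = [2 * x + 1 for x in test_x]


def fit_line(xs, ys):
    """Ordinary least-squares line; returns a prediction function."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def fit_interpolating_polynomial(xs, ys):
    """Degree len(xs)-1 polynomial through every training point (Lagrange form)."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict


def mse(model, xs, ys):
    """Mean squared error of `model` on the points (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


line = fit_line(train_x, train_y)
poly = fit_interpolating_polynomial(train_x, train_y)
for name, model in [("line", line), ("degree-9 polynomial", poly)]:
    print(f"{name:>20}: train MSE = {mse(model, train_x, train_y):.3f}, "
          f"test MSE = {mse(model, test_x, test_y):.3f}")
```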

Solutions to Overfitting

To fix overfitting, you can:

- Simplify the model: Choose a model with fewer parameters or reduce the number of features.

- Collect more data: Increasing the amount of training data can help the model learn more generalized patterns.

- Reduce noise: Clean the training data to remove errors and outliers.

Regularization techniques such as L1 and L2 regularization can also help by adding penalties for large coefficients, thus simplifying the model.
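
To see how an L2 penalty constrains a model, here is a minimal closed-form ridge regression for a single feature, on hypothetical toy data. Minimizing the squared error plus `alpha * w**2` gives `w = Sxy / (Sxx + alpha)`, so the slope shrinks toward zero as the regularization strength `alpha` grows. The function name is hypothetical; in practice you would use scikit-learn’s `Ridge` (L2) or `Lasso` (L1) classes, which also take an `alpha` parameter.

```python
# Toy data (hypothetical): one feature, roughly linear target.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]


def fit_ridge_1d(xs, ys, alpha):
    """L2-regularized (ridge) fit of y = w*x + b for a single feature.

    Minimizes sum((w*x + b - y)**2) + alpha * w**2. The intercept b is
    left unpenalized, which is the usual convention.
    """
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / (sxx + alpha)
    b = mean_y - w * mean_x
    return w, b


for alpha in [0.0, 1.0, 10.0, 100.0]:
    w, b = fit_ridge_1d(xs, ys, alpha)
    print(f"alpha = {alpha:>5}: w = {w:.3f}, b = {b:.3f}")
```

With `alpha = 0` this reduces to ordinary least squares; larger values trade a worse fit on the training data for a simpler, flatter model.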

Underfitting the Training Data

Underfitting is the opposite of overfitting. It happens when a model is too simple to learn the underlying structure of the data. For instance, a linear model may be too simple to capture the complexities of life satisfaction data, which may require a more flexible approach.

To address underfitting, you can:

- Use a More Complex Model: Choose a model with more parameters or a completely different type of model.

- Improve Feature Engineering: Create more relevant features or enhance existing ones.

- Reduce Constraints: Reduce the regularization constraints (for example, lower the regularization hyperparameter) to give the model more flexibility to fit the data.
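
The feature-engineering remedy can be illustrated with a toy dataset (hypothetical) where the target is exactly `x**2`. A straight line in `x` underfits badly, while the same linear learner applied to the engineered feature `x**2` fits perfectly. This is a minimal sketch; the helper names are illustrative, not from any library.

```python
# Toy data (hypothetical): the target is a quadratic function of x.
xs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
ys = [x ** 2 for x in xs]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = w*x + b; returns (w, b)."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    w = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return w, mean_y - w * mean_x


def mse(preds, ys):
    """Mean squared error between predictions and targets."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


# Too simple: a straight line in x cannot follow the curve.
w, b = fit_line(xs, ys)
linear_mse = mse([w * x + b for x in xs], ys)

# Better feature: the same linear learner on the engineered feature x**2.
x_squared = [x ** 2 for x in xs]
w2, b2 = fit_line(x_squared, ys)
quadratic_mse = mse([w2 * z + b2 for z in x_squared], ys)

print(f"line in x:    training MSE = {linear_mse:.2f}")    # 12.00
print(f"line in x**2: training MSE = {quadratic_mse:.2f}")  # 0.00
```

Because the data is symmetric around zero, the best line in `x` is flat (`w = 0`) and simply predicts the mean, which is the signature of a model too simple for the data even on the training set.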

Stepping Back: The Big Picture

To summarize, machine learning is about making systems learn from data rather than being explicitly programmed. It encompasses various types of systems and algorithms, each with its own strengths and weaknesses. The success of a machine learning project depends on:

- Data Quality and Quantity: Ensuring that the training data is representative, large enough, and of high quality.

- Model Complexity: Balancing the model’s complexity to avoid overfitting and underfitting.

- Evaluation and Tuning: Using validation techniques to assess model performance and fine-tuning hyperparameters.

Testing and Validating

Evaluating how well a model generalizes to new cases is crucial. A common approach is to split the data into a training set and a test set. The model is trained on the training set and evaluated on the test set to estimate its generalization error.
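
A train/test split can be sketched in a few lines. The function below is a simplified, hypothetical stand-in for scikit-learn’s `train_test_split` (which takes `test_size` and `random_state` arguments); a fixed seed keeps the split identical across runs, so the test set never leaks into training.

```python
import random


def train_test_split(data, test_ratio=0.2, seed=42):
    """Shuffle a copy of `data` and split it into (train, test) lists."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


samples = list(range(100))  # stand-in for 100 labeled examples
train, test = train_test_split(samples)
print(len(train), len(test))  # 80 20
```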

It’s important to avoid evaluating on the test set repeatedly during model selection and hyperparameter tuning: the model and its hyperparameters end up adapted to that particular test set, so the resulting performance estimate is overly optimistic. Instead, use a separate validation set to compare different models and select the best one.

Hyperparameter Tuning and Model Selection

When choosing between different models or tuning hyperparameters, use a validation set that is representative of the data the model will see in production. Holdout validation sets aside part of the training set as a validation set: you train candidate models on the reduced training set and select the one that performs best on the validation set. The selected model is then retrained on the full training set (including the validation set) and evaluated once on the test set.
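
The holdout workflow can be sketched end to end, using the ridge strength `alpha` as the hyperparameter being tuned. The data is synthetic toy data, and all names and candidate values are hypothetical choices for illustration.

```python
import random

# Toy data (hypothetical): noisy linear target; x values are drawn at
# random, so the list is already in random order and can be sliced.
rng = random.Random(1)
data = [(x, 3 * x + rng.gauss(0, 2)) for x in (rng.uniform(0, 10) for _ in range(60))]
train_full, test = data[:50], data[50:]
train, valid = train_full[:40], train_full[40:]  # holdout validation set


def fit_ridge_1d(pairs, alpha):
    """Ridge fit of y = w*x + b for one feature; returns a predict function."""
    xs, ys = [x for x, _ in pairs], [y for _, y in pairs]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / (sxx + alpha)
    b = mean_y - w * mean_x
    return lambda x: w * x + b


def mse(model, pairs):
    """Mean squared error of `model` on (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in pairs) / len(pairs)


candidates = [0.0, 1.0, 10.0, 100.0, 1000.0]
# 1. Train each candidate on the reduced training set, score it on the holdout.
val_errors = {a: mse(fit_ridge_1d(train, a), valid) for a in candidates}
best_alpha = min(val_errors, key=val_errors.get)
# 2. Retrain the winner on the full training set (train + validation).
final_model = fit_ridge_1d(train_full, best_alpha)
# 3. Evaluate once on the untouched test set.
print(f"best alpha = {best_alpha}, test MSE = {mse(final_model, test):.2f}")
```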

Repeated cross-validation is another technique: each model is trained on part of the data and evaluated on a small validation set, and this is repeated for many different validation sets. Averaging these evaluations gives a more accurate measure of the model’s performance. The drawback is computational cost, since training time is multiplied by the number of validation sets.
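
A common variant of this idea is k-fold cross-validation, where the data is split into k folds and each fold serves once as the validation set. The sketch below generates the fold indices and averages a score over them, assuming the data has already been shuffled. The function names and the mean-predictor “model” are hypothetical; scikit-learn’s `KFold` and `cross_val_score` are the usual library tools.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def cross_validate(train_and_score, n_samples, k=5):
    """Average the validation scores returned by `train_and_score` over k folds."""
    scores = [train_and_score(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]


def mean_baseline_mse(train_idx, val_idx):
    """Toy model: predict the training mean; score = MSE on the validation fold."""
    pred = sum(values[i] for i in train_idx) / len(train_idx)
    return sum((values[i] - pred) ** 2 for i in val_idx) / len(val_idx)


print(cross_validate(mean_baseline_mse, len(values), k=5))
```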

Data Mismatch

Sometimes, the data used for training might not perfectly represent the data encountered in production. For example, if you are developing a mobile app to recognize flowers and use web-scraped images for training, the app might perform poorly if the web images differ significantly from those taken with a mobile camera.

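To address data mismatch, make sure your validation and test sets are as representative as possible of the production data (in this example, real photos taken with a mobile camera). It also helps to hold out part of the web-scraped training images as a train-dev set. After training on the rest, evaluate the model on the train-dev set: if it performs poorly there, it has overfit the training set; if it performs well on the train-dev set but poorly on the validation set, the problem is most likely data mismatch, and you can try preprocessing the web images to make them look more like the production images.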
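
The decision rule for reading train-dev results can be written as a small function. This is a rough sketch: `diagnose` is a hypothetical helper, errors are fractions, and the `tolerance` threshold for a “large” gap is an arbitrary illustrative value that you would set per project.

```python
def diagnose(train_error, train_dev_error, dev_error, tolerance=0.02):
    """Rough reading of errors on the train, train-dev and dev sets.

    train-dev: held-out data from the same source as the training set
    (e.g. web-scraped images). dev: production-like data (e.g. phone photos).
    `tolerance` is an arbitrary gap threshold chosen for illustration.
    """
    if train_dev_error - train_error > tolerance:
        return "overfitting: the model does not generalize even to same-source data"
    if dev_error - train_dev_error > tolerance:
        return "data mismatch: fine on web-style data, worse on production-like data"
    return "no large gap: look elsewhere (e.g. underfitting if all errors are high)"


print(diagnose(0.05, 0.06, 0.25))
```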

Conclusion

Machine learning is a complex field with numerous challenges. Ensuring that your data is sufficient, representative, and of high quality is crucial. Additionally, selecting the right model and tuning it appropriately are key to building effective machine learning systems. By addressing these challenges thoughtfully and systematically, you can enhance the performance and reliability of your machine learning models.

Thanks for reading!

If you enjoyed this article and would like to be notified of my future posts, consider subscribing. You’ll stay updated on the latest insights, tutorials, and tips in the world of data science.

Additionally, I would love to hear your thoughts and suggestions. Please leave a comment with your feedback or any topics you’d like me to cover in upcoming blogs. Your engagement means a lot to me, and I look forward to sharing more valuable content with you.
