Chapter 1 – Part 2 of Our Machine Learning Adventure

Introduction

Welcome back to our machine learning journey! In our last installment, we laid the groundwork by exploring the basics of machine learning, focusing on supervised and unsupervised learning. Today, we will study semi-supervised learning, a hybrid approach that bridges the gap between the two and opens up new possibilities and efficiencies in data analysis. We will also discuss reinforcement learning, batch and online learning, and the difference between instance-based and model-based learning.

Understanding Semi-supervised Learning

Semi-supervised learning is a technique that uses both labeled and unlabeled data. Unlike supervised learning, which relies entirely on labeled data, or unsupervised learning, which operates entirely on unlabeled data, semi-supervised learning strikes a balance between the two. This method is particularly useful in situations where obtaining labeled data is expensive or time-consuming, but unlabeled data is readily available.

The main advantage of semi-supervised learning is the ability to improve learning accuracy with less labeled data. For example, imagine a company that has a large dataset of customer interactions, of which only a small fraction is labeled. Semi-supervised learning can use the unlabeled portion to enhance the learning process, leading to better predictive models.

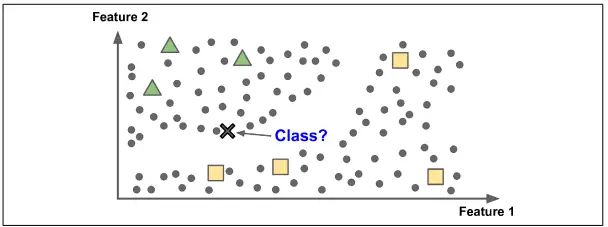

The figure above shows semi-supervised learning with two classes (triangles and squares): the unlabeled examples (circles) help classify a new instance (the cross) into the triangle class rather than the square class, even though it is closer to the labeled squares.

Key Techniques in Semi-supervised Learning

- Self-training: In this approach, a model is first trained on the small amount of labeled data. It then predicts labels for the unlabeled data, and the predictions it is most confident about are added to the training set as pseudo-labels. Repeating this cycle lets the model improve over time. The method is simple but can be powerful.

- Co-training: This technique involves training two models on different views of the same data. Each model’s predictions on the unlabeled data are used to train the other model, resulting in mutual improvement. Co-training is particularly effective when the data can be split into two distinct and informative views.

- Generative models: These models aim to understand the data distribution and generate new data points. By modeling the distribution of both labeled and unlabeled data, generative models can provide valuable insights and improve classification tasks.

- Graph-based methods: These methods represent data as graphs, where nodes are data points and edges represent similarities. By propagating labels through the graph, these methods can effectively exploit the structure of data to enhance learning.
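To make the last technique in the list more concrete, here is a minimal sketch of graph-based label propagation using scikit-learn's `LabelSpreading`. The synthetic two-moons dataset, the choice to keep every tenth label, and the `n_neighbors=7` setting are all assumptions made for illustration, not recommendations.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.semi_supervised import LabelSpreading

# Synthetic two-class data (illustrative only). With shuffle=False the points
# are ordered along each moon, so slicing gives evenly spread labels.
X, y_true = make_moons(n_samples=200, noise=0.05, shuffle=False, random_state=0)

# Keep the label of every tenth point; scikit-learn expects -1 for "unlabeled".
y = np.full_like(y_true, -1)
y[::10] = y_true[::10]

# Build a k-nearest-neighbor graph over all points and spread labels along it.
model = LabelSpreading(kernel="knn", n_neighbors=7)
model.fit(X, y)

# transduction_ holds the label inferred for every training point.
unlabeled = y == -1
accuracy = (model.transduction_[unlabeled] == y_true[unlabeled]).mean()
print(f"Labeled points: {(~unlabeled).sum()}, unlabeled points: {unlabeled.sum()}")
print(f"Accuracy on originally unlabeled points: {accuracy:.2f}")
```

Only 20 of the 200 points carry a label here; the rest are labeled purely through their neighbors in the graph, which is the structure-exploiting behavior described above.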

Additional Learning Paradigms

- Reinforcement Learning:

  - Reinforcement learning is a very different beast. The learning system, called an agent in this context, observes the environment, selects and performs actions, and receives rewards or penalties in return.

  - Unlike the methods above, the agent learns by interacting with its environment rather than from a fixed dataset. This paradigm suits tasks that require sequential decision-making and has applications in robotics, game playing, and autonomous systems.

- Batch and Online Learning:

  - Batch Learning: In this method, the model is trained on the entire dataset at once. This works well for static datasets but can be computationally intensive, and incorporating new data typically means retraining on the full dataset.

  - Online Learning: In contrast, online learning updates the model incrementally as new data arrives. This approach is ideal for dynamic environments where data is constantly flowing, allowing the model to adapt over time.

- Instance-Based Versus Model-Based Learning:

  - Instance-Based Learning: This approach, also known as lazy learning, stores the training examples and uses them directly to make predictions, typically by comparing new inputs with the stored ones. A classic example is k-nearest neighbors.

  - Model-Based Learning: Also known as eager learning, this approach builds a predictive model from the training data. The model is then used to make predictions on new data. Examples include linear regression and neural networks.
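The instance-based versus model-based distinction is easier to see side by side. The sketch below fits a k-nearest neighbors regressor and a linear regression on the same toy data; the data (points on the line y = 2x + 1) and the choice of three neighbors are assumptions made purely for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor

# Toy data lying exactly on the line y = 2x + 1 (illustrative values).
X_train = np.array([[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]])
y_train = 2.0 * X_train[:, 0] + 1.0

# Instance-based: keeps the training set and averages the 3 nearest targets.
knn = KNeighborsRegressor(n_neighbors=3).fit(X_train, y_train)

# Model-based: estimates a slope and an intercept from the training data.
linear = LinearRegression().fit(X_train, y_train)

# One query inside the training range (x = 2) and one far outside it (x = 10).
X_new = np.array([[2.0], [10.0]])
knn_pred = knn.predict(X_new)
linear_pred = linear.predict(X_new)

print("k-NN predictions:  ", knn_pred)
print("Linear predictions:", linear_pred)
```

At x = 2 both models predict 5. At x = 10 the linear model extrapolates to 21, while k-NN can only average its three nearest stored examples and predicts 9. Neither behavior is right in general; the point is that one learner answers from stored examples and the other from fitted parameters.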

Advantages and Challenges

Semi-supervised learning offers several advantages. It reduces the need for large labeled datasets, cutting the time and cost associated with data labeling. It can also improve accuracy by drawing on a much larger pool of data than the labeled set alone. However, there are challenges to consider. The performance of semi-supervised techniques can be sensitive to the quality of the initial labeled data. In addition, incorrect labels assigned to unlabeled examples can propagate through later training rounds and degrade the model.
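One common way to limit the risk of propagating incorrect labels in self-training is to accept only high-confidence predictions as pseudo-labels. Here is a minimal sketch using the `threshold` parameter of scikit-learn's `SelfTrainingClassifier`; the synthetic dataset, the 30 labeled samples, and the 0.95 threshold are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.semi_supervised import SelfTrainingClassifier

# Synthetic binary classification data (illustrative only).
X, y_true = make_classification(n_samples=300, n_features=5, random_state=0)

# Pretend only the first 30 samples are labeled; -1 marks the unlabeled rest.
n_labeled = 30
y = y_true.copy()
y[n_labeled:] = -1

# Only predictions with probability >= 0.95 are accepted as pseudo-labels.
model = SelfTrainingClassifier(LogisticRegression(max_iter=1000), threshold=0.95)
model.fit(X, y)

# labeled_iter_: 0 = originally labeled, >0 = pseudo-labeled in that round,
# -1 = never confident enough to be labeled.
original = model.labeled_iter_ == 0
pseudo = model.labeled_iter_ > 0
never = model.labeled_iter_ == -1

print(f"Originally labeled: {original.sum()}")
print(f"Pseudo-labeled:     {pseudo.sum()}")
print(f"Left unlabeled:     {never.sum()}")
if pseudo.any():
    # Possible here only because the synthetic data comes with true labels.
    agreement = (model.transduction_[pseudo] == y_true[pseudo]).mean()
    print(f"Pseudo-label accuracy: {agreement:.2f}")
```

A higher threshold generally admits fewer but more reliable pseudo-labels, while a lower one uses more of the unlabeled data at a greater risk of reinforcing early mistakes.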

Case Studies and Examples

Consider the healthcare industry, where labeled data is often limited due to privacy concerns and the high cost of expert annotation. Semi-supervised learning can improve predictive models by drawing on the large amount of unlabeled data available, which may lead to better diagnostic tools and more personalized treatments.

In finance, semi-supervised learning can improve fraud detection systems. By combining a small set of labeled fraudulent transactions with a much larger set of unlabeled transactions, these systems can identify fraudulent behavior more accurately.

Tools and Libraries

Many tools and libraries support semi-supervised learning. Popular choices include scikit-learn, which ships several semi-supervised estimators in its `sklearn.semi_supervised` module, and TensorFlow and PyTorch, which provide the flexibility to implement custom models. Getting started with these tools involves installing the libraries and exploring their documentation to understand their capabilities.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.semi_supervised import SelfTrainingClassifier

# Example code snippet for self-training.
# X holds all samples (labeled and unlabeled); y holds the known labels,
# with -1 marking each unlabeled sample, as scikit-learn expects.
base_classifier = RandomForestClassifier()
self_training_model = SelfTrainingClassifier(base_classifier)
self_training_model.fit(X, y)
```

Future of Semi-supervised Learning

The future of semi-supervised learning is promising. As research progresses, new methods and techniques are being developed to address current limitations and enhance performance. Integration of semi-supervised learning with other machine learning paradigms such as reinforcement learning has great potential. Furthermore, advances in computing power and the availability of large datasets will continue to drive innovation in the field.

Conclusion

Semi-supervised learning is at the forefront of machine learning innovation, providing a powerful approach to solving data shortage problems and improving model performance. By understanding and using these techniques, we can unlock new opportunities and efficiencies across industries.

Call to Action

We encourage you to share your thoughts and questions in the comments section below. Subscribe to our blog to stay updated with the latest insights and developments in machine learning. Stay tuned for the next part of our machine learning adventure, where we explore more advanced topics and techniques. Happy learning!

Thanks for reading!

If you enjoyed this article and would like to receive notifications for my future posts, consider subscribing. By subscribing, you’ll stay updated on the latest insights, tutorials, and tips in the world of data science.

Additionally, I would love to hear your thoughts and suggestions. Please leave a comment with your feedback or any topics you’d like me to cover in upcoming blogs. Your engagement means a lot to me, and I look forward to sharing more valuable content with you.
